AI, malpractice and the future of physician liability

Sara Gerke, David A. Simon, J.D., LL.M., Ph.D., and Deepika Srivastava join the show to talk about malpractice and physician liability in cases involving artificial intelligence.

They explain how AI could redefine the standard of care, what happens when an algorithm contributes to patient harm, and practical steps physicians can take now to protect themselves — including documentation, communication and clear internal policies.

Check out our October cover story for a deeper look at how AI is reshaping medical malpractice.

Music Credits:

Groovy 90s Hip-Hop Acid Jazz by Musinova

Relaxing Lounge by Classy Call me Man

A Textbook Example by Skip Peck

Editor's note: Episode timestamps and transcript produced using AI tools.

0:00 – Opening: The AI malpractice paradox

Why using too little AI and using too much can both create legal risk.

0:13 – Episode setup

Austin introduces the guests and frames the core question: How does AI shift malpractice liability?

1:14 – AI and the standard of care

Gerke explains how jurors already view AI-guided decisions as potentially “reasonable.”

2:07 – Adoption drives legal expectations

Simon outlines how widespread use — not hype — determines when AI becomes mandatory practice.

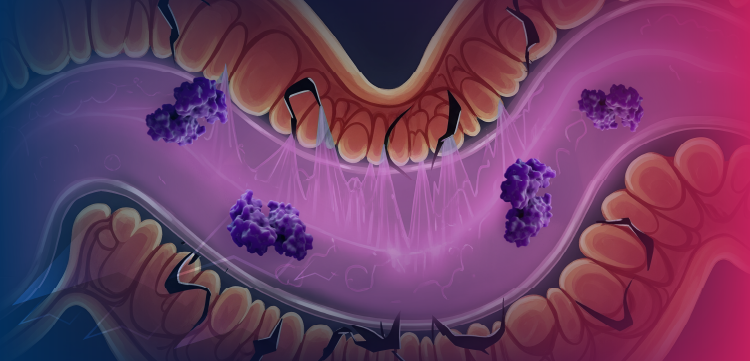

4:03 – When AI harms a patient

Gerke on physician and hospital exposure today — and surgeons’ skepticism of manufacturer liability.

5:51 – Regulated devices enter the chat

Why manufacturers get pulled in when AI tools behave like medical devices.

6:04 – Device pathways and lawsuits

Simon details 510(k) vs. De Novo vs. PMA — and how each influences manufacturer accountability.

8:27 – Policy levers

How FDA and state decisions could shift responsibility upstream.

9:25 – Insurance reality check

Srivastava: Physicians still bear primary legal risk since they make the clinical call and sign the chart.

10:29 – Transition: From risk to action

Before pulling the plug on AI tools — what physicians should actually do.

10:43 – Practical protections

Srivastava’s immediate steps: informed consent, chart review, governance, and patient disclosure.

12:01 – Transparency as defense

How clear communication about AI use strengthens trust and reduces exposure.

13:20 – Training + governance gaps

Keeping workflows tight matters just as much as clinical judgment.

14:02 – Vetting tools and contracts

Simon: If AI claims accuracy, ask for validation — and liability protections.

15:30 – Labeling matters

Gerke calls for food-style transparency labels for AI devices.

16:27 – The “learned intermediary” burden

Even with better labels, liability flows back to the physician.

17:01 – P2 Management Minute

Quick interlude from Keith Reynolds.

17:54 – Rapid compliance playbook

Five habits that will hold up in court — whether you follow or override AI suggestions.

18:40 – Closing

Why AI is here to stay — and why documentation discipline must evolve with it.

19:10 – Outro

Credits, subscription reminder and link to October cover story.
